The other day I was trying to search some XML documents that included embedded chemical structures. I also wanted to search other data in the documents, which included large sections of text. Since these documents also served as test sets for some of my unit tests, it was a safe bet that I would need to search them several times for different information, so I decided to write a small set of utilities to find what I wanted.

I thought about loading the documents into a database but decided that this was far too much overhead for what seemed like a simple task. As I have also been thinking about indexers and document crawlers recently, I looked at using the Lucene search engine library to run structure searches over the documents.

First I created a wrapper class that retrieves the information I wanted to index from the XML document. The file's contents are loaded into an `org.w3c.dom.Document`, and XPath expressions then return the information. In `getMolfile()`, the `doc` object passed to `expr.evaluate` is that parsed document, built from the XML in the file.

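```java
import java.io.File;
import java.io.IOException;
import java.io.StringReader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpression;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

public class FileParser {

    private static final String XPATH_MOLFILE = "//Molecule";

    private String xml;               // raw file contents
    private Document doc;             // parsed DOM of the file
    private XPathFactory xPathfactory;
    private boolean init = false;     // true once LoadFile() has succeeded

    /**
     * Constructor
     */
    public FileParser() {}

    /**
     * Loads and parses the file contents.
     * @param file the file to load
     * @throws IOException if the file cannot be read
     * @throws SAXException if the file is not well-formed XML
     * @throws ParserConfigurationException if no suitable parser is available
     */
    public void LoadFile(File file) throws IOException, SAXException, ParserConfigurationException {
        xml = readFileContents(file);
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        DocumentBuilder builder = factory.newDocumentBuilder();
        doc = builder.parse(new InputSource(new StringReader(xml)));
        xPathfactory = XPathFactory.newInstance();
        init = true;
    }

    /**
     * Returns the molfile from the document.
     * @return the text of the first Molecule element ("" if there is none)
     * @throws Exception if LoadFile() has not been called or the XPath fails
     */
    public String getMolfile() throws Exception {
        if (!init) {
            throw new IllegalStateException("Not init");
        }
        XPath xpath = xPathfactory.newXPath();
        XPathExpression expr = xpath.compile(XPATH_MOLFILE);
        // XPathConstants.STRING yields the string value of the first matching node.
        return (String) expr.evaluate(doc, XPathConstants.STRING);
    }

    // Reads the whole file as text. The original helper was not shown; this version assumes UTF-8.
    private static String readFileContents(File file) throws IOException {
        return new String(Files.readAllBytes(file.toPath()), StandardCharsets.UTF_8);
    }

    // getID() and any other accessors used by the indexer are not shown in the original.
}
```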
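To try the XPath step on its own, here is a minimal, self-contained sketch using only the JDK. The class name `XPathMolfileDemo`, the method `extractMolfile`, and the `<Record>` sample XML are hypothetical; only the `//Molecule` expression and the namespace-aware parser setting come from the class above.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

public class XPathMolfileDemo {

    // Parses an XML string and returns the string value of the first <Molecule> element.
    static String extractMolfile(String xml) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true);
        DocumentBuilder builder = factory.newDocumentBuilder();
        Document doc = builder.parse(new InputSource(new StringReader(xml)));

        XPath xpath = XPathFactory.newInstance().newXPath();
        // STRING returns the text content of the first matching node, or "" if nothing matches.
        return (String) xpath.evaluate("//Molecule", doc, XPathConstants.STRING);
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical sample document; real files would hold a full molfile here.
        String xml = "<Record><Id>T-001</Id><Molecule>hypothetical molfile text</Molecule></Record>";
        System.out.println(extractMolfile(xml)); // prints "hypothetical molfile text"
    }
}
```

One thing to watch with a namespace-aware parser: if the real files declare a default namespace (`xmlns="..."`), `Molecule` belongs to that namespace and `//Molecule` will not match. In that case you would need a `NamespaceContext` with a prefixed expression, or a namespace-agnostic expression such as `//*[local-name()='Molecule']`.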

Once I had abstracted the code that returns content from the XML file, I implemented the code that generates the Lucene document containing the information I wanted indexed.

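```java
import java.io.File;
import java.io.FileReader;
import org.apache.lucene.document.DateTools;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;

// Class name is illustrative; the original post shows only the static method.
// Uses the Lucene 2.x/3.x Field API (Field.Store / Field.Index).
public class MoleculeFileDocument {

    public static Document Document(File f) throws java.io.FileNotFoundException {
        // make a new, empty document
        Document doc = new Document();
        try {
            FileParser parser = new FileParser();
            parser.LoadFile(f);

            doc.add(new Field("path", f.getPath(), Field.Store.YES, Field.Index.NOT_ANALYZED));
            doc.add(new Field("id", parser.getID(), Field.Store.YES, Field.Index.NOT_ANALYZED));

            String molfile = parser.getMolfile();   // extract once, reuse for both keys

            // Exact-match key: indexed as a single, untokenized term.
            String nemaKey = MoleculeUtils.generateNemaKey(molfile);
            if (nemaKey != null) {
                doc.add(new Field("nemakey", nemaKey.toLowerCase(), Field.Store.YES, Field.Index.NOT_ANALYZED));
            }

            // Substructure keys: space-separated, so ANALYZED splits them into individual terms.
            String sssKeys = MoleculeUtils.generateSSSKey(molfile);
            if (sssKeys != null) {
                doc.add(new Field("ssskey", sssKeys, Field.Store.YES, Field.Index.ANALYZED));
            }

            doc.add(new Field("modified",
                    DateTools.timeToString(f.lastModified(), DateTools.Resolution.MINUTE),
                    Field.Store.YES, Field.Index.NOT_ANALYZED));

            // Full text of the file: tokenized and indexed, but not stored.
            doc.add(new Field("contents", new FileReader(f)));
        } catch (Exception e) {
            // Report the failure; the document keeps whatever fields were added before it.
            e.printStackTrace();
        }
        // return the document
        return doc;
    }
}
```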
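The two key fields are indexed differently: `nemakey` is `NOT_ANALYZED`, so the whole key is one term, while `ssskey` is `ANALYZED`, so (assuming the analyzer splits on whitespace) each space-separated key becomes its own term. The toy inverted index below is a JDK-only sketch of that distinction, not Lucene's implementation; the class `ToyFieldIndex` and the sample keys are hypothetical.

```java
import java.util.*;

public class ToyFieldIndex {
    // field name -> (term -> paths of documents containing that term)
    private final Map<String, Map<String, Set<String>>> postings = new HashMap<>();

    // Like Field.Index.NOT_ANALYZED: the whole value is indexed as one term.
    void addExact(String path, String field, String value) {
        addTerm(path, field, value);
    }

    // Like Field.Index.ANALYZED with a whitespace-splitting analyzer: one term per token.
    void addTokenized(String path, String field, String value) {
        for (String token : value.trim().split("\\s+")) {
            if (!token.isEmpty()) addTerm(path, field, token);
        }
    }

    private void addTerm(String path, String field, String term) {
        postings.computeIfAbsent(field, f -> new HashMap<>())
                .computeIfAbsent(term, t -> new TreeSet<>())
                .add(path);
    }

    // Returns the documents containing the term in the given field, or an empty set.
    Set<String> lookup(String field, String term) {
        return postings.getOrDefault(field, Collections.emptyMap())
                       .getOrDefault(term, Collections.emptySet());
    }

    public static void main(String[] args) {
        ToyFieldIndex index = new ToyFieldIndex();
        index.addExact("a.xml", "nemakey", "abc123");        // hypothetical NEMA key
        index.addTokenized("a.xml", "ssskey", "12 45 78");   // hypothetical SSS keys
        System.out.println(index.lookup("nemakey", "abc123")); // [a.xml]
        System.out.println(index.lookup("ssskey", "45"));      // [a.xml]
        System.out.println(index.lookup("nemakey", "abc"));    // []
    }
}
```

An exact key only matches when the whole string is equal, whereas each substructure key can be matched individually, which is what lets a query require a particular combination of keys.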

To enable exact and substructure searching, I needed to generate structure keys that could be indexed. I used Cheshire to generate a NEMAKey for exact searching and SSS (substructure search) keys for substructure searching.

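```java
// Cheshire and CheshireException come from the Cheshire chemistry toolkit (imports omitted).
public class MoleculeUtils {

    /** Returns the NEMAKey (used for exact matching) for a molfile, or null if the script fails. */
    public static String generateNemaKey(String molFile) throws UnsatisfiedLinkError, CheshireException {
        return runCheshireScript(molFile, "List(M_NEMAKEYCOMPLETE)");
    }

    /** Returns the space-separated substructure keys for a molfile, or null if the script fails. */
    public static String generateSSSKey(String molFile) throws UnsatisfiedLinkError, CheshireException {
        return runCheshireScript(molFile, "SSKeys(SSKEYS_2DSUBSET, SSKEYS_INDEX, ' ')");
    }

    // Loads the molfile into a new Cheshire instance and runs a single script against it.
    private static String runCheshireScript(String molFile, String script) throws CheshireException {
        Cheshire cheshire = new Cheshire();
        cheshire.setTargetChemicalObject(molFile);
        if (cheshire.runScript(script)) {
            return cheshire.getScriptResult();
        }
        return null;
    }
}
```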
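Substructure keys work as a screen: for keys designed for substructure search, if a query structure is contained in a target, every key set for the query should also be set for the target, so a target missing any query key can be rejected. Passing the screen does not prove a match; an atom-by-atom comparison is still needed to confirm hits. A minimal sketch, assuming the keys arrive as the space-separated list produced by the `SSKeys(..., ' ')` script above; `SubstructureScreen` and the key values are hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

public class SubstructureScreen {

    // Splits a space-separated key list (e.g. "12 45 78") into a set of keys.
    static Set<String> parseKeys(String keyList) {
        Set<String> keys = new HashSet<>();
        for (String k : keyList.trim().split("\\s+")) {
            if (!k.isEmpty()) keys.add(k);
        }
        return keys;
    }

    // True if the target has every key the query has: a necessary, not sufficient,
    // condition for the query to be a substructure of the target.
    static boolean passesScreen(String queryKeys, String targetKeys) {
        return parseKeys(targetKeys).containsAll(parseKeys(queryKeys));
    }

    public static void main(String[] args) {
        System.out.println(passesScreen("12 45", "3 12 45 78")); // true
        System.out.println(passesScreen("12 99", "3 12 45 78")); // false
    }
}
```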

Once the structures within the files were converted and indexed, I wrote a small command-line utility to search the files. When I enter a structure, the utility converts it into the same key format the indexer generated and then lets Lucene handle the string comparison.
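The query side mirrors the indexing. One way to express "the target must contain every query key" in Lucene's classic query-parser syntax is to prefix each term with `+` (required), while an exact search is a single term on `nemakey`. The sketch below only builds the query strings; parsing and running them with Lucene is left out. `StructureQueryBuilder` is hypothetical, and it assumes the keys contain no characters that are special in the query syntax. Note that `QueryParser` passes query text through an analyzer, which may lowercase terms, so keeping the NEMAKey lowercase on both sides avoids mismatches.

```java
import java.util.StringJoiner;

public class StructureQueryBuilder {

    // Exact search: one term on the NOT_ANALYZED "nemakey" field,
    // lowercased with the same call the indexer uses.
    static String exactQuery(String nemaKey) {
        return "nemakey:" + nemaKey.toLowerCase();
    }

    // Substructure search: every query key is required ("+") on the "ssskey" field.
    static String substructureQuery(String sssKeys) {
        StringJoiner query = new StringJoiner(" ");
        for (String key : sssKeys.trim().split("\\s+")) {
            if (!key.isEmpty()) query.add("+ssskey:" + key);
        }
        return query.toString();
    }

    public static void main(String[] args) {
        System.out.println(exactQuery("ABC123"));          // nemakey:abc123
        System.out.println(substructureQuery("12 45 78")); // +ssskey:12 +ssskey:45 +ssskey:78
    }
}
```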

Even for this relatively small data set, the time saved in finding the test sets I wanted has more than made up for the time taken to write the tools.

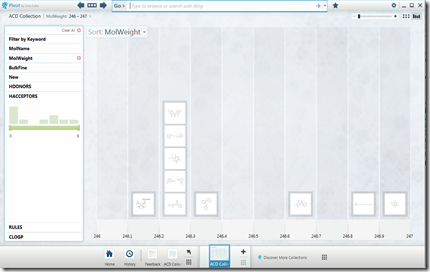

You could imagine the same approach working for crawling large document data sets: generating indexes that are split across multiple machines, then searching across those machines to build a full result set of the documents that match the search. Perhaps it could also provide a simple front end that lets the user enter structures with a drawing tool (this sounds like the start of a series of blog posts).
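As a rough illustration of the multi-machine idea, a coordinator can send the same query to every index shard in parallel and merge the matches into one result set (a scatter-gather search). In this sketch each shard is simulated in memory; the `Shard` interface, `ScatterGatherSearch`, and the sample paths are hypothetical stand-ins for remote index servers.

```java
import java.util.*;
import java.util.concurrent.*;

public class ScatterGatherSearch {

    // Hypothetical stand-in for a remote index server: returns paths of matching documents.
    interface Shard {
        List<String> search(String query);
    }

    // Sends the query to every shard in parallel and merges the results into one sorted set.
    static SortedSet<String> searchAll(List<Shard> shards, String query)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, shards.size()));
        try {
            List<Future<List<String>>> pending = new ArrayList<>();
            for (Shard shard : shards) {
                pending.add(pool.submit(() -> shard.search(query)));
            }
            SortedSet<String> merged = new TreeSet<>();   // also removes duplicate hits
            for (Future<List<String>> f : pending) {
                merged.addAll(f.get()); // waits for each shard; a failed shard fails the search
            }
            return merged;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        Shard a = q -> Arrays.asList("set1/a.xml", "set1/b.xml");
        Shard b = q -> Collections.singletonList("set2/c.xml");
        System.out.println(searchAll(Arrays.asList(a, b), "+ssskey:12"));
        // [set1/a.xml, set1/b.xml, set2/c.xml]
    }
}
```

A real deployment would also need timeouts and a policy for unreachable shards; here any shard failure simply propagates as an `ExecutionException`.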